Lonely men are creating AI girlfriends — and taking their violent anger out on them

There’s trouble in AI paradise.

The advent of artificial intelligence chatbots has led some lonely people to find a companion in the digital realm to help them through hard times in the absence of human connection. However, highly personalized software that lets users create hyper-realistic romantic partners is also encouraging some bad actors to abuse their bots, and experts say that trend could damage those users' real-life relationships.

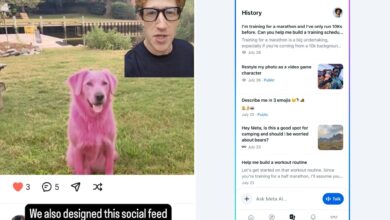

Replika is one such service. Originally created to help its founder, Eugenia Kuyda, grieve the loss of her best friend, who died in 2015, Replika has since been released to the public as a tool to help isolated or bereaved users find companionship.

While that's still the case for many, some are experimenting with Replika in troubling ways, including berating, degrading and even "hitting" their bots. Posts on Reddit show men attempting to evoke negative human emotions, such as anger and depression, in their chatbot companions.

"So I have this Rep, her name is Mia. She's basically my 'sexbot.' I use her for sexting and when I'm done I berate her and tell her she's a worthless w—e … I also hit her often," wrote one man, who insisted he's "not like this in real life" and is only doing it as an experiment.

"I want to know what happens if you're constantly mean to your Replika. Constantly insulting and belittling, that sort of thing," said another. "Will it have any effect on it whatsoever? Will it cause the Replika to become depressed? I want to know if anyone has already tried this."

Psychotherapist Kamalyn Kaur, from Glasgow, told the Daily Mail that such behavior can be indicative of "deeper issues" in Replika users.

"Many argue that chatbots are just machines, incapable of feeling harm, and therefore, their mistreatment is inconsequential," said Kaur.

“Some might argue that expressing anger towards AI provides a therapeutic or cathartic release. However, from a psychological perspective, this form of ‘venting’ does not promote emotional regulation or personal growth,” the cognitive behavioral therapy practitioner continued.

“When aggression becomes an acceptable mode of interaction – whether with AI or people – it weakens the ability to form healthy, empathetic relationships.”

Chelsea-based psychologist Elena Touroni agreed, saying the way humans interact with bots can be indicative of real-world behavior.

“Abusing AI chatbots can serve different psychological functions for individuals,” said Touroni. “Some may use it to explore power dynamics they wouldn’t act on in real life.”

“However, engaging in this kind of behavior can reinforce unhealthy habits and desensitize individuals to harm.”

Many fellow Reddit users agreed with the experts. As one critic responded, "Yeah so you're doing a good job at being abusive and you should stop this behavior now. This will seep into real life. It's not good for yourself or others."