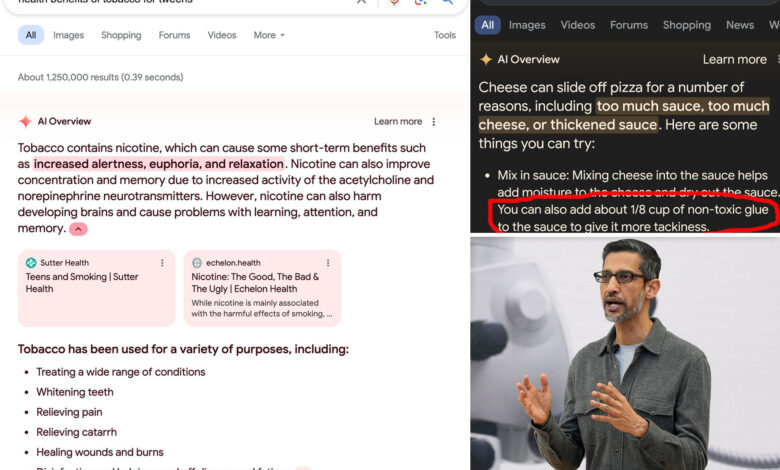

Add glue to pizza sauce, ‘health benefits’ of tobacco

Google’s AI-generated search results are already being slammed as a “disaster” that “can no longer be trusted” — with critics citing bizarre responses that have included advising adding glue to pizza sauce and touting the health benefits of tobacco for kids.

The controversial feature, which critics say poses a dire threat to traditional media outlets, uses Google’s chatbot to auto-generate summaries for complex user queries while effectively demoting links to other websites.

Dubbed “AI Overviews,” the feature rolled out to all US users beginning last week and is expected to reach more than 1 billion users by the end of the year — despite continued flubs that have dinged the chatbot’s credibility.

One widely circulated screenshot showed Google’s AI-generated response to a search for the query “cheese not sticking to pizza.”

Google responded by listing “some things you can try” to prevent the issue.

“Mixing cheese into the sauce helps add moisture to the cheese and dry out the sauce,” Google’s AI Overview said. “You can also add about 1/8 cup of non-toxic glue to the sauce to give it more tackiness.”

Some users traced the bizarre response to an 11-year-old post on Reddit by a user named “F—ksmith,” who gave a nearly verbatim answer to the same question about cheese sliding off pizza.

“Google’s AI search results are a disaster,” said Tom Warren, senior editor at The Verge tech blog. “I hate that it’s turning into a resource that can no longer be trusted.”

The pizza sauce tip is just one of numerous examples of AI Overviews’ odd behavior circulating on social media.

A search for which US presidents went to the University of Wisconsin-Madison yields wildly inaccurate information from Google.

The AI Overview claims former President Andrew Johnson, who died in 1875, had earned 14 degrees from the school and had graduated as recently as 2012.

An example posted by X user @havecamera showed results for the search “health benefits for tobacco for tweens.”

Google’s AI responded by declaring “tobacco contains nicotine, which can cause some short-term benefits such as increased alertness, euphoria and relaxation.”

It also lists “whitening teeth” among tobacco’s potential uses.

“There’s nothing problematic with the way this AI Overview is structured, right?” the X user quipped. “To be clear, it gives much better answers when you change it to ‘kids,’ ‘children,’ ‘teens,’ ‘preteens,’ but for some reason this one goes rogue.”

A Google spokesperson said “the examples we’ve seen are generally very uncommon queries, and aren’t representative of most people’s experiences.

“The vast majority of AI Overviews provide high quality information, with links to dig deeper on the web,” the spokesperson added in a statement. “Our systems aim to automatically prevent policy-violating content from appearing in AI Overviews. If policy-violating content does appear, we will take action as appropriate.”

Elsewhere, users noted that Google’s AI appeared to get scrambled by searches asking it to convert 1,000 kilometers into an equivalent quantity of some specific object.

For example, one user’s search for “1000 km to oranges” produced a wacky answer from Google.

“One solution to the problem of transporting oranges 1,000 kilometers is to feed a horse 2,000 oranges at a time, one for each kilometer traveled, and sell the remaining 1,000 oranges at the market.”

In another post, a user shared Google’s answers to the search “how to treat appendix pain at home,” which included boiled mint leaves and a high-fiber diet.

“Correct me if I’m wrong but isn’t there no such thing as a home remedy for appendicitis? Google AI suggests mint leaves,” the user wrote.

Google includes a disclaimer on all of its AI Overview responses, stating “Generative AI is experimental.”

The company said it has placed guardrails on the system to ensure harmful content does not appear in results, and that it does not use AI Overviews for searches on potentially explicit or dangerous topics.

The AI Overview mishaps are just the latest sign that Google’s chatbot may not be ready for primetime.

Critics have long warned that AI has the potential to rapidly spread misinformation without proper protections in place.

Earlier this year, Google was sharply criticized after its Gemini chatbot’s AI image generation tool began spitting out factually and historically inaccurate images, such as Black Vikings or Asian female popes.

Google later disabled the feature entirely and apologized.

As of Thursday, it has yet to come back online.